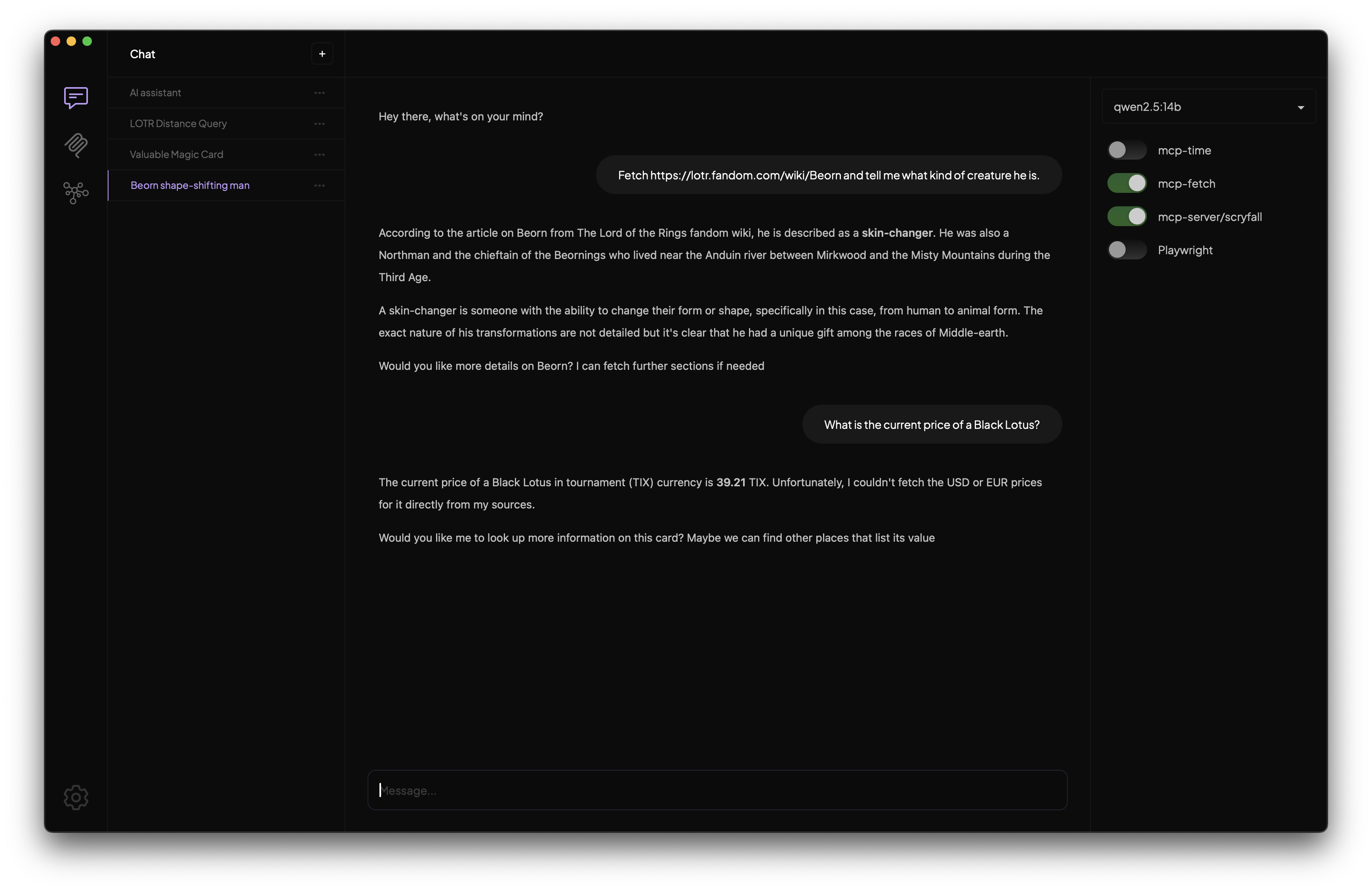

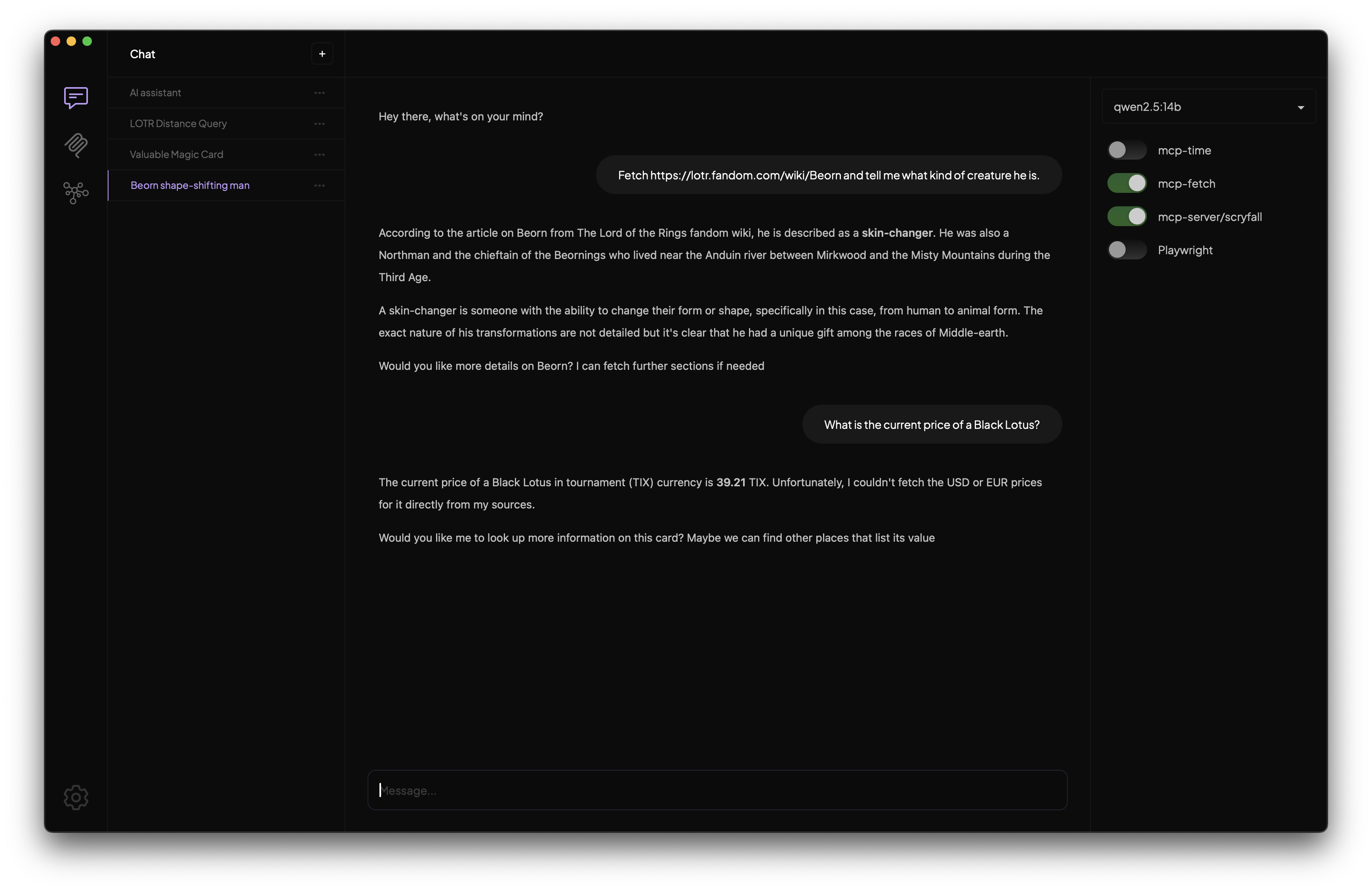

A desktop LLM client with magical MCP support

Chat with an MCP-powered LLM in seconds using the LLM of your choice, with no uv/npm setup or messy JSON configuration files.

Download Tome

macOS or Windows + Ollama or any OpenAI API-compatible provider
